As described in the previous two posts, Smooth Streaming is Microsoft’s implementation of HTTP-based adaptive streaming, which is a hybrid media delivery method. It acts like streaming, but is in fact based on HTTP progressive download. The HTTP downloads are performed in a series of small chunks, allowing the media to be cached easily and cheaply at the edge of the network, closer to the end users. Providing multiple encoded bitrates of the same media source also allows Silverlight clients to seamlessly and dynamically switch between bitrates depending on network conditions and CPU power. The resulting end user experience is one of reliable, consistent playback without stutter, buffering or “last mile” congestion. In one word: Smooth.

In this post we’ll take a closer look at how Smooth Streaming works: format, server, and client.

Smooth Streaming Format

Smooth Streaming is the first Microsoft media format in over a decade to use a file format other than ASF. It is based on the ISO/IEC 14496-12 ISO Base Media File Format specification, better known as the MP4 file specification. Why MP4 and not ASF? Well, there are several reasons:

- MP4 is a lightweight container format with less overhead than ASF

- MP4 is easier to parse in managed (.NET) code than ASF

- MP4 is based on a widely used standard, making 3rd party adoption and support more straightforward

- MP4 was architected with H.264 video codec support in mind, and we’re counting on H.264 support in Smooth Streaming and Silverlight 3 (ASF can also contain H.264 video, but it’s not as straightforward as with MP4)

- MP4 was designed to natively support payload fragmentation within the file

There are actually two parts to the Smooth Streaming format: the wire format and the disk file format. In Smooth Streaming a video is recorded in full length to disk as a single file (one file per encoded bitrate), but it’s transferred to the client as a series of small file chunks. The wire format defines the structure of the chunks that IIS sends to the client, whereas the file format defines the structure of the contiguous file on disk. Fortunately, the MP4 specification allows an MP4 file to be internally organized as a series of fragments, which means that in Smooth Streaming the wire format is a direct subset of the file format.

What are these MP4 “fragments” that I speak of? The basic unit of an MP4 file is called a “box.” These boxes can contain both data and metadata. The MP4 specification allows for various ways to organize data and metadata boxes within a file. In most media scenarios it is considered useful to have the metadata written before the data so that a player client application can have more information about the video/audio it’s about to play before it plays it. However, in live streaming scenarios it is often not possible to write the metadata upfront about the whole data stream because it’s simply not fully known yet. Furthermore, less upfront metadata means less overhead, which can lead to shorter startup times. For these reasons the MP4 ISO Base Media File Format specification was designed to allow MP4 boxes to be organized in a fragmented manner, where the file can be written “as you go” as a series of short metadata/data box pairs, rather than one long metadata/data pair. The Smooth Streaming file format heavily leverages this aspect of the MP4 file specification, to the point where at Microsoft we often interchangeably refer to Smooth Streaming files as “Fragmented MP4 files” or “(f)MP4.”

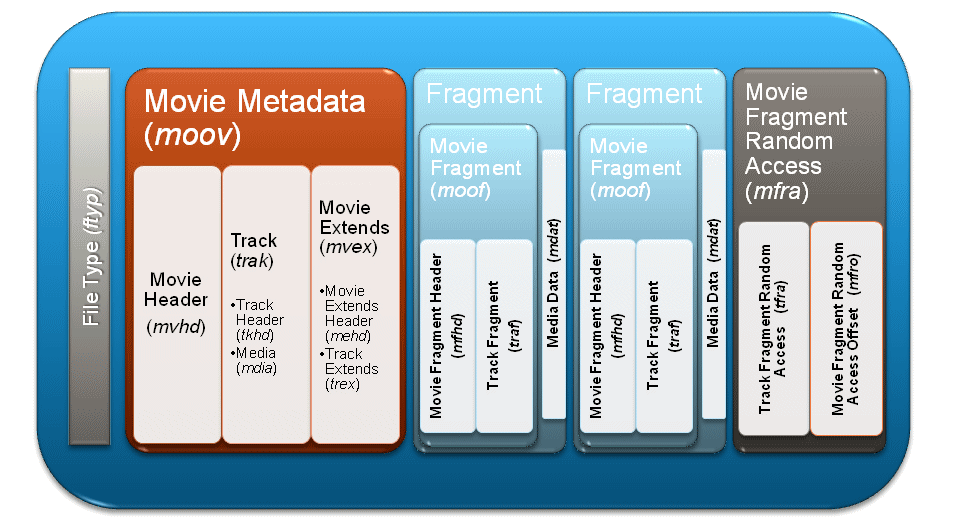

Here is a high-level overview of what a Smooth Streaming file looks like on the inside:

In a nutshell, the file starts with file-level metadata (‘moov’) that generically describes the file, but the bulk of the payload is actually contained in the fragment boxes, which carry more accurate fragment-level metadata (‘moof’) and media data (‘mdat’). (The diagram only shows 2 fragments, but a typical Smooth Streaming file has one fragment for every 2 seconds of video/audio.) The file closes with an ‘mfra’ index box, which allows easy and accurate seeking within the file.
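The box layout described above is simple to walk programmatically. Every ISO Base Media box begins with a header: a 32-bit big-endian size (covering the whole box, header included) and a four-character type, with a 64-bit size following the type when the 32-bit field is 1. The Python sketch below lists the top-level boxes of a buffer; `walk_boxes`, the `make_box` helper and the tiny synthetic file are illustrative only, with empty or zero-filled payloads instead of real metadata and media.

```python
import struct
from typing import Iterator, Optional, Tuple


def walk_boxes(data: bytes, start: int = 0,
               end: Optional[int] = None) -> Iterator[Tuple[str, int, int]]:
    """Yield (type, offset, size) for each box laid out back to back in data[start:end]."""
    end = len(data) if end is None else end
    offset = start
    while offset + 8 <= end:
        size, raw_type = struct.unpack_from(">I4s", data, offset)
        header_len = 8
        if size == 1:
            # 'largesize': a 64-bit size follows the 4-character type.
            if offset + 16 > end:
                raise ValueError(f"truncated largesize header at offset {offset}")
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header_len = 16
        elif size == 0:
            # Size 0 means the box runs to the end of the enclosing data.
            size = end - offset
        if size < header_len or offset + size > end:
            raise ValueError(f"malformed box at offset {offset}")
        yield raw_type.decode("ascii"), offset, size
        offset += size


def make_box(box_type: str, payload: bytes = b"") -> bytes:
    """Build a box with a 32-bit size header (illustration only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type.encode("ascii")) + payload


# A toy layout mirroring the overview: file-level metadata, two fragments, an index.
toy_file = (make_box("moov")
            + make_box("moof", bytes(8)) + make_box("mdat", bytes(32))
            + make_box("moof", bytes(8)) + make_box("mdat", bytes(32))
            + make_box("mfra"))

for box_type, offset, size in walk_boxes(toy_file):
    print(f"{box_type} at byte {offset}, {size} bytes")
```

Running it lists the ‘moov’ box, two ‘moof’/‘mdat’ pairs and the closing ‘mfra’ box in file order, which is exactly the fragmented structure a Smooth Streaming file relies on.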

When a Silverlight client requests a video time slice from the IIS Smooth Streaming server, the server simply seeks to the appropriate starting fragment in the MP4 file, lifts the fragment out of the file, and sends it over the wire to the client. This is why we refer to the fragments as the “wire format.” This technique greatly enhances the efficiency of the IIS server because it requires no remuxing or rewriting overhead.
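Because each fragment is a self-contained ‘moof’ + ‘mdat’ pair, “lifting” it out of the file amounts to a byte-range copy. The sketch below assumes the server already knows the byte offset of the fragment’s ‘moof’ box and that both boxes use ordinary 32-bit size headers; `lift_fragment`, `read_box_header` and the toy file are hypothetical names for illustration, not the IIS implementation.

```python
import struct
from typing import Tuple


def read_box_header(data: bytes, offset: int) -> Tuple[str, int]:
    """Return (type, size) of the box at offset; assumes a 32-bit size field."""
    size, raw_type = struct.unpack_from(">I4s", data, offset)
    return raw_type.decode("ascii"), size


def lift_fragment(data: bytes, moof_offset: int) -> bytes:
    """Copy one 'moof' + 'mdat' pair out of the file, byte for byte (no remuxing)."""
    moof_type, moof_size = read_box_header(data, moof_offset)
    if moof_type != "moof":
        raise ValueError(f"expected 'moof' at {moof_offset}, found {moof_type!r}")
    mdat_offset = moof_offset + moof_size
    mdat_type, mdat_size = read_box_header(data, mdat_offset)
    if mdat_type != "mdat":
        raise ValueError(f"expected 'mdat' at {mdat_offset}, found {mdat_type!r}")
    return data[moof_offset:mdat_offset + mdat_size]


def make_box(box_type: str, payload: bytes = b"") -> bytes:
    """Build a box with a 32-bit size header (illustration only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type.encode("ascii")) + payload


# Toy file: 'moov', then two fragments whose media payloads differ.
toy_file = (make_box("moov")
            + make_box("moof", bytes(8)) + make_box("mdat", b"A" * 32)
            + make_box("moof", bytes(8)) + make_box("mdat", b"B" * 32))

second = lift_fragment(toy_file, 64)  # the second 'moof' starts at byte 64
print(len(second), second[4:8], second[-1:])
```

The returned bytes are a valid standalone file fragment as-is, which is why no remuxing step is needed between disk and wire.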

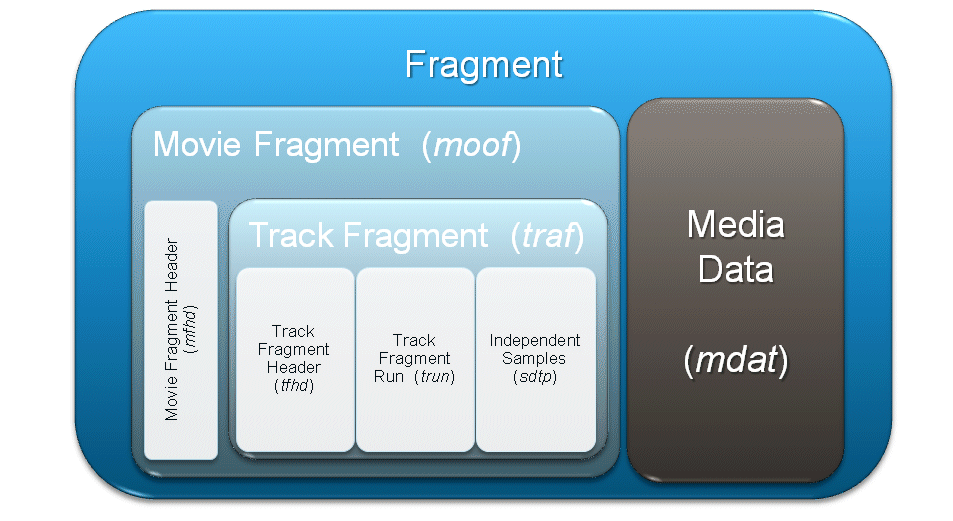

Here is what an MP4 fragment looks like in more detail:

We say that the Smooth Streaming format is based on the MP4 file format because even though we’re following the ISO specification, we specify our own box organization schema and some custom boxes. In order to differentiate Smooth Streaming files from “vanilla” MP4 files, we use new file extensions: *.ismv (video+audio) and *.isma (audio only). I keep forgetting to ask the IIS Media team what the acronyms exactly stand for, but my best guess would be “IIS Smooth Streaming Media Video (Audio)”.

Smooth Streaming Media Assets

A typical Smooth Streaming media asset therefore consists of the following files:

- MP4 files containing video/audio
  - *.ismv – contains video and audio, or only video
    - 1 ISMV file per encoded video bitrate
  - *.isma – contains only audio
    - In videos with audio, the audio track can be muxed into an ISMV file instead of a separate ISMA file

- Server manifest file
  - *.ism
  - Describes the relationships between media tracks, bitrates and files on disk
  - Only used by the IIS Smooth Streaming server – not by client

- Client manifest file
  - *.ismc
  - Describes to the client the available streams, codecs used, bitrates encoded, video resolutions, markers, captions, etc.
  - It’s the first file delivered to the client

Both manifest file formats are based on XML. The server manifest file format is based specifically on the SMIL 2.0 XML format specification.

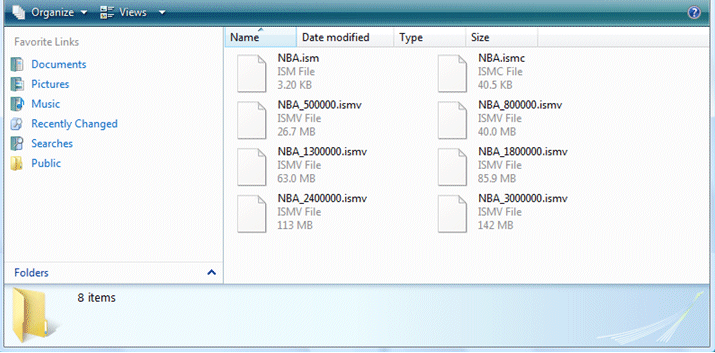

A folder containing a single Smooth Streaming media asset might look something like this:

In this particular case the audio track is contained in the NBA_3000000.ismv file.
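On the server side, the manifest’s job is to map a requested quality level to a file on disk. The sketch below parses a deliberately simplified, hypothetical server manifest laid out in the SMIL 2.0 style, reusing NBA_3000000.ismv from the example folder; the NBA_400000.ismv file name is assumed, and real *.ism files carry additional metadata omitted here. Only `xml.etree.ElementTree` from the Python standard library is used, and `quality_level_map` is an illustrative name.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Tuple

# Simplified, hypothetical server manifest in SMIL 2.0 style (not a complete *.ism).
# The audio track is muxed into the highest-bitrate ISMV file, as in the example folder.
SERVER_MANIFEST = """<smil xmlns="http://www.w3.org/2001/SMIL20/Language">
  <body>
    <switch>
      <video src="NBA_3000000.ismv" systemBitrate="3000000" />
      <video src="NBA_400000.ismv" systemBitrate="400000" />
      <audio src="NBA_3000000.ismv" systemBitrate="64000" />
    </switch>
  </body>
</smil>"""

SMIL_NS = {"smil": "http://www.w3.org/2001/SMIL20/Language"}


def quality_level_map(manifest_xml: str) -> Dict[Tuple[str, int], str]:
    """Map (track kind, bitrate in bits/s) to the file that holds that track."""
    root = ET.fromstring(manifest_xml)
    mapping = {}
    for kind in ("video", "audio"):
        for track in root.iterfind(f".//smil:{kind}", SMIL_NS):
            mapping[(kind, int(track.get("systemBitrate")))] = track.get("src")
    return mapping


files = quality_level_map(SERVER_MANIFEST)
print(files[("video", 400000)])  # NBA_400000.ismv
print(files[("audio", 64000)])   # NBA_3000000.ismv
```

Keying on both track kind and bitrate matters here because, as in the example folder, one physical file can hold several tracks.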

Smooth Streaming Manifest Files

The Smooth Streaming Wire/File Format specification defines the manifest XML language as well as the MP4 box structure. Because the manifests are based on XML, they are highly extensible. Among the features already included in the current Smooth Streaming format specification is support for:

- VC-1, WMA, H.264 and AAC codecs

- Text streams

- Multi-language audio tracks

- Alternate video and audio tracks (e.g. multiple camera angles, director’s commentary, etc.)

- Multiple hardware profiles (i.e. same bitrates targeted at different playback devices)

- Script commands, markers/chapters, captions

- Client manifest Gzip compression

- URL obfuscation

- Live encoding and streaming

For an example of a Smooth Streaming On-Demand Server Manifest file, see here.

For an example of a Smooth Streaming Client Manifest file, see here.

Smooth Streaming Playback: Bringing It All Home

Microsoft’s adaptive streaming prototype (used for NBC Olympics 2008) relied on physically chopping up long video files into small file chunks. In order to retrieve the chunks from the web server, the player client simply needed to download files in a logical sequence: 00001.vid, 00002.vid, 00003.vid, etc.

As I’ve explained in this and previous posts, Smooth Streaming uses a more sophisticated file format and server design. The videos are no longer split up into thousands of file chunks, but are instead “virtually” split up into fragments (typically 1 fragment per video GOP) and stored within a single contiguous MP4 file. This implies two significant changes in server and client design too:

- The server must be able to translate URL requests into exact byte range offsets within the MP4 file, and

- The client can request chunks in a more developer-friendly manner, such as by timecode instead of by index number

The first thing a Silverlight client requests from the Smooth Streaming server is the *.ismc client manifest. The manifest tells it which codecs were used to compress the content (so that the Silverlight runtime can initialize the correct decoder and build the playback pipeline), which bitrates and resolutions are available, and a list of all the available chunks and either their start times or durations.
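To illustrate how a client might turn the manifest into a list of fragment requests, here is a sketch that parses a trimmed-down, hypothetical client manifest. The element and attribute names (`StreamIndex`, `QualityLevel`, `c` with `t`/`d`, and the `Url` template) are modeled on the *.ismc layout but should be treated as assumptions. The sketch honors an explicit start time (`t`) when present and otherwise accumulates durations (`d`, here 20000000 units of 100 ns, i.e. 2 seconds per chunk); `fragment_urls` is an illustrative name.

```python
import xml.etree.ElementTree as ET
from typing import List

# Trimmed-down, hypothetical client manifest: three 2-second video chunks
# (durations in 100 ns units) at two quality levels.
CLIENT_MANIFEST = """<SmoothStreamingMedia MajorVersion="2" MinorVersion="0" Duration="60000000">
  <StreamIndex Type="video" Chunks="3"
               Url="QualityLevels({bitrate})/Fragments(video={start time})">
    <QualityLevel Bitrate="3000000" FourCC="WVC1" />
    <QualityLevel Bitrate="400000" FourCC="WVC1" />
    <c n="0" d="20000000" />
    <c n="1" d="20000000" />
    <c n="2" d="20000000" />
  </StreamIndex>
</SmoothStreamingMedia>"""


def fragment_urls(manifest_xml: str, stream_type: str, bitrate: int) -> List[str]:
    """Expand the stream's URL template once per chunk listed in the manifest."""
    root = ET.fromstring(manifest_xml)
    for stream in root.iter("StreamIndex"):
        if stream.get("Type") != stream_type:
            continue
        bitrates = {int(q.get("Bitrate")) for q in stream.iter("QualityLevel")}
        if bitrate not in bitrates:
            raise ValueError(f"bitrate {bitrate} not offered for {stream_type}")
        template = stream.get("Url")
        urls, start = [], 0
        for chunk in stream.iter("c"):
            if chunk.get("t") is not None:
                start = int(chunk.get("t"))  # an explicit start time wins
            urls.append(template.replace("{bitrate}", str(bitrate))
                                .replace("{start time}", str(start)))
            start += int(chunk.get("d", "0"))  # otherwise accumulate durations
        return urls
    raise ValueError(f"no {stream_type} stream in manifest")


for url in fragment_urls(CLIENT_MANIFEST, "video", 400000):
    print(url)
```

The results are relative paths; prefixing them with the asset’s URL (such as http://video.foo.com/NBA.ism/ in this post’s examples) gives the full request URLs.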

With IIS7 Smooth Streaming, a client is expected to request fragments in the form of RESTful URLs:

http://video.foo.com/NBA.ism/QualityLevels(400000)/Fragments(video=610275114)

http://video.foo.com/NBA.ism/QualityLevels(64000)/Fragments(audio=631931065)

The values passed in the URL represent the encoded bitrate (e.g. 400000) and the fragment start offset (e.g. 610275114) expressed in an agreed-upon time unit (usually 100 ns). Both values are known to the client from the client manifest.
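Pulling the two values back out of such a URL is simple string work. A minimal sketch follows, assuming the usual 100 ns time unit (10,000,000 ticks per second) and the path layout shown in the example URLs; `parse_fragment_url` and `FragmentRequest` are hypothetical names, not part of IIS. With that unit, 610275114 ticks corresponds to about 61.03 seconds into the video.

```python
import re
from typing import NamedTuple
from urllib.parse import urlsplit

TICKS_PER_SECOND = 10_000_000  # assumes the usual 100 ns time unit

FRAGMENT_PATH = re.compile(
    r"/(?P<asset>[^/]+\.ism)/QualityLevels\((?P<bitrate>\d+)\)"
    r"/Fragments\((?P<stream>\w+)=(?P<start>\d+)\)$"
)


class FragmentRequest(NamedTuple):
    asset: str        # e.g. "NBA.ism"
    bitrate: int      # encoded bitrate, bits per second
    stream: str       # e.g. "video" or "audio"
    start_ticks: int  # fragment start offset in 100 ns units

    @property
    def start_seconds(self) -> float:
        return self.start_ticks / TICKS_PER_SECOND


def parse_fragment_url(url: str) -> FragmentRequest:
    """Split a fragment URL into its quality level and start-time components."""
    match = FRAGMENT_PATH.search(urlsplit(url).path)
    if match is None:
        raise ValueError(f"not a Smooth Streaming fragment URL: {url}")
    return FragmentRequest(match["asset"], int(match["bitrate"]),
                           match["stream"], int(match["start"]))


req = parse_fragment_url(
    "http://video.foo.com/NBA.ism/QualityLevels(400000)/Fragments(video=610275114)")
print(req.bitrate, req.stream, req.start_seconds)
```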

Upon receiving a request like this, the IIS7 Smooth Streaming component looks up the quality level (bitrate) in the corresponding *.ism server manifest and maps it to a physical *.ismv or *.isma file on disk. It then reads the appropriate MP4 file and, based on its ‘tfra’ index box (stored inside the ‘mfra’ box at the end of the file), figures out which fragment (‘moof’ + ‘mdat’) corresponds to the requested start time offset. It then extracts that fragment and sends it over the wire to the client as a standalone file. This is a particularly important part of the overall design, because the sent fragment/file can now be automatically cached further down the network, potentially saving the origin server from sending the same fragment/file again to another client requesting the same RESTful URL.
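The start-time-to-fragment lookup can be sketched as a binary search over index entries sorted by time. Real ‘tfra’ entries also record track-fragment, track-run and sample numbers, which this simplified, hypothetical `TfraEntry` omits, and the byte offsets in the usage example are made up. Because clients take start times from the client manifest, the sketch accepts only exact matches and treats anything else as a missing fragment.

```python
from bisect import bisect_left
from typing import NamedTuple, Sequence


class TfraEntry(NamedTuple):
    """Hypothetical, simplified view of one entry in a 'tfra' box."""
    time: int         # fragment start time in 100 ns ticks
    moof_offset: int  # byte offset of the fragment's 'moof' box in the file


def find_moof_offset(entries: Sequence[TfraEntry], start_time: int) -> int:
    """Map a requested start time to the byte offset of its 'moof' box.

    entries must be sorted by time; only exact start-time matches are accepted.
    """
    times = [e.time for e in entries]
    i = bisect_left(times, start_time)
    if i == len(entries) or entries[i].time != start_time:
        raise LookupError(f"no fragment starts at {start_time}")
    return entries[i].moof_offset


# Made-up index for three 2-second fragments of one track.
index = [TfraEntry(0, 1200), TfraEntry(20000000, 250000), TfraEntry(40000000, 510000)]
print(find_moof_offset(index, 20000000))
```

From that offset, the server only has to read the ‘moof’ and ‘mdat’ box sizes to know how many bytes to send.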

As you can see, requesting chunks of video/audio from the server is easy. But what about dynamic bitrate switching that makes adaptive streaming so effective? This part of the Smooth Streaming experience is implemented entirely in client-side Silverlight application code – the server plays no part in the bitrate switching process. The client-side code looks at chunk download times, buffer fullness, rendered frame rates, and other factors – and based on them decides when to request higher or lower bitrates from the server. Remember, if during the encoding process we ensure that all bitrates of the same source are perfectly frame aligned (same length GOPs, no dropped frames), then switching between bitrates is completely seamless – and Smooth.
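Since the switching logic lives entirely in the client, it can be as simple or as elaborate as the player wants. The sketch below is a deliberately naive, hypothetical heuristic, not the actual Silverlight algorithm: it estimates throughput from the last chunk’s download time, picks the highest bitrate that fits under a safety margin, and steps down one level when the buffer runs low. The 0.8 safety factor, the 4-second low-buffer threshold and the 1500000 bps quality level are arbitrary illustrative values, and rendered frame rate and CPU load are ignored.

```python
from typing import Sequence


def choose_bitrate(available_bps: Sequence[int], current_bps: int,
                   chunk_bytes: int, download_seconds: float,
                   buffer_seconds: float, safety: float = 0.8,
                   low_buffer_seconds: float = 4.0) -> int:
    """Pick the next bitrate to request (naive, illustrative heuristic)."""
    levels = sorted(available_bps)
    if buffer_seconds < low_buffer_seconds:
        # Buffer is draining: step down one level from the current bitrate.
        lower = [b for b in levels if b < current_bps]
        return lower[-1] if lower else levels[0]
    throughput_bps = chunk_bytes * 8 / download_seconds
    # Highest level that fits within a fraction of the measured throughput.
    affordable = [b for b in levels if b <= safety * throughput_bps]
    return affordable[-1] if affordable else levels[0]


levels = [400000, 1500000, 3000000]
print(choose_bitrate(levels, 400000, chunk_bytes=1_000_000,
                     download_seconds=2.0, buffer_seconds=10.0))
```

Whatever rule is used, the switch stays seamless only because every bitrate’s fragments start at the same timestamps, so the next request simply swaps the QualityLevels value in the URL.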

In my next blog post: Encoding For Smooth Streaming
